Assessing Assessments

With the policy focus on foundational learning and increased emphasis on data-based decision making, the debate has shifted from ‘To assess or not to assess’ to how to utilise assessments to drive learning (Chan & Yeung, 2021). This piece introduces the different approaches to assessment and the purposes they serve, using the Government of Tamil Nadu’s flagship Ennum Ezhuthum (EE) programme as an exemplar.

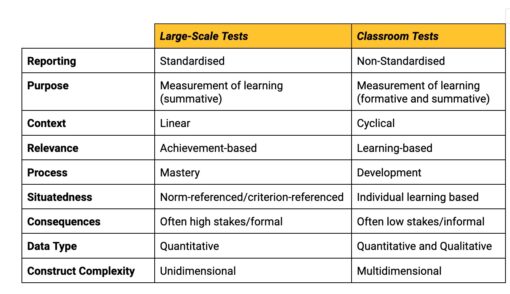

Assessments are often classified broadly into two types: school-based assessment and large-scale standardised assessments. The difference between the two modes of assessment has been succinctly captured in this table from Wesolowski (2020):

Figure 1.1: Table reproduced from Wesolowski (2020)

Prior to the launch of EE, the regular practice in government schools was for teachers to put together the question papers, and the overall performance of students was not tracked (except within the school). Assessments served an extremely limited purpose as they did not inform instruction or provide insights for teacher training or curriculum design. While comprehensive and continuous evaluation (CCE) was deemed integral through section 29 of The Right of Children to Free and Compulsory Education Act, 2009, the mandate needs to be contextualised and implemented effectively. The EE programme seeks to build on this mandate by focusing on interdisciplinary learning, experiential learning, and 21st century skills (as defined in Joynes et al, 2019). In this way, the themes referred to in CCE are fine-tuned, contextualised and made tangible, visible and achievable at scale through the EE programme. The programme builds upon Constructivist Learning Theory, which states that learners construct knowledge for themselves instead of taking in information passively.

Following the launch of the EE programme, three types of assessments have been taking place in government schools since 2022: two types of Formative Assessment and a Summative Assessment. In Formative A, students are assessed on the projects or activities that they do, while in Formative B, students are assessed on the content that they have been taught. Formative B happens every week, the idea being to test students on the topics covered in that particular week. In the Summative Assessment, students are assessed at the end of the term (quarter) on the entire portion taught in that term. An interesting lens for understanding the role of these different types of assessments is Stobart’s (2008) distinction between assessment for learning (AfL) and assessment of learning (AoL). In their study on assessment in the digital age in the early childhood classroom, Neumann et al (2019) equate AfL with Formative Assessments and AoL with Summative Assessments. As per Neumann et al, the assessments are meant to serve different purposes and the distinction between AoL and AfL is further elaborated upon below:

The EE programme seeks to leverage the benefits of both school-based assessment and large-scale testing by conducting large-scale, teacher-led testing in classrooms for students in grades 1, 2 and 3. This approach includes components of both AfL and AoL, since both formative and summative assessments are carried out. As a digital tool is used for testing, data on learning outcomes from the weekly formative assessments can be used to modify lesson plans and even to provide student-specific insights to assist teachers.

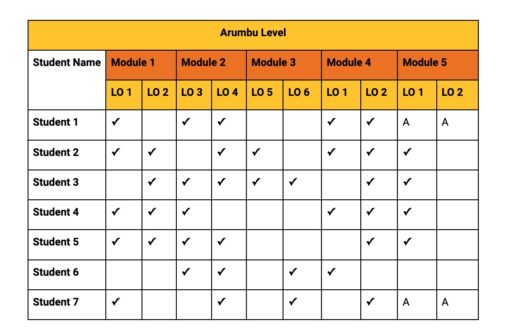

For example, below is a snapshot of the learning journey chart as envisaged for the EE application, illustrating how data collected through formative assessments can be used. From performance data of the kind shown in the figure, the teacher may infer that Student 4 needs to focus on LO4, or that the entire class has performed poorly on LO5 and may need remediation on it.

Figure 1.2: Table contains fictitious data, for illustrative purposes only
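The kind of inference described above can be sketched in a few lines of Python. This is only an illustrative sketch and not the EE application's logic: the scores, the student names and both thresholds (`STUDENT_THRESHOLD`, `CLASS_THRESHOLD`) are assumptions made up for this example. It separates learning outcomes on which the whole class struggled from those on which only individual students need support.

```python
# Hypothetical formative-assessment scores (percent correct) per learning outcome (LO).
# All names, scores and thresholds are illustrative assumptions, not EE data.
scores = {
    "Student 1": {"LO1": 80, "LO2": 75, "LO3": 90, "LO4": 85, "LO5": 30},
    "Student 2": {"LO1": 70, "LO2": 85, "LO3": 80, "LO4": 75, "LO5": 35},
    "Student 3": {"LO1": 90, "LO2": 80, "LO3": 85, "LO4": 80, "LO5": 40},
    "Student 4": {"LO1": 85, "LO2": 70, "LO3": 75, "LO4": 20, "LO5": 25},
}

STUDENT_THRESHOLD = 40  # assumed cut-off: below this, a student is flagged on an LO
CLASS_THRESHOLD = 50    # assumed cut-off: class average below this suggests remediation


def class_averages(scores):
    """Return the mean score per LO (assumes every student has a score for every LO)."""
    los = sorted({lo for per_student in scores.values() for lo in per_student})
    return {lo: sum(s[lo] for s in scores.values()) / len(scores) for lo in los}


def weak_class_los(scores, threshold=CLASS_THRESHOLD):
    """LOs on which the class average falls below the threshold."""
    return [lo for lo, avg in class_averages(scores).items() if avg < threshold]


def weak_student_los(scores, class_wide, threshold=STUDENT_THRESHOLD):
    """Per-student weak LOs, excluding LOs already flagged for the whole class."""
    flagged = {}
    for student, per_lo in scores.items():
        weak = [lo for lo, score in sorted(per_lo.items())
                if score < threshold and lo not in class_wide]
        if weak:
            flagged[student] = weak
    return flagged


class_wide = weak_class_los(scores)
individual = weak_student_los(scores, class_wide)
print("Needs whole-class remediation:", class_wide)
print("Needs individual support:", individual)
```

With this made-up data, LO5 is flagged for the whole class and Student 4 is flagged individually on LO4, mirroring the two inferences a teacher might draw from the chart.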

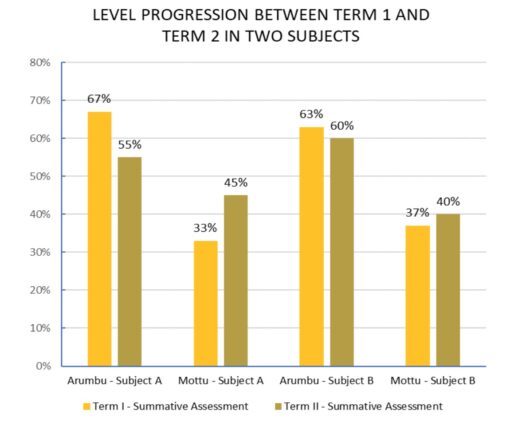

Summative assessments, on the other hand, can be used to glean larger insights, gauge performance and track overall progress. The programme focuses on level-based learning, wherein Arumbu equates to learning at the level expected of a Class 1 student, Mottu equates to that of a Class 2 student, and so on. The student workbooks are designed so that students use the workbook suited to their level irrespective of their class. Progress between levels is gauged from one summative assessment to the next. The accompanying graph has been created using sample data for illustrative purposes only and does not reflect actual results. It is assumed to represent the performance of students in Class 2 in two different subjects. From the graph, we can draw inferences about the effectiveness of the methodologies, teacher learning materials and books; for instance, Subject A may be assumed to be performing better than Subject B on the basis of progress made from Term 1 to Term 2.

Figure 1.3: Table contains fictitious percentages, for illustrative purposes only
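The term-on-term comparison described above amounts to simple arithmetic, sketched below in Python. The metric used here (the share of Class 2 students at or above the Mottu level) and all figures are assumptions chosen for illustration; the source does not specify which measure the graph plots.

```python
# Hypothetical share (%) of Class 2 students at or above the Mottu level
# (the level expected of Class 2) in each summative assessment.
# Figures are invented for illustration and are not EE results.
at_expected_level = {
    "Subject A": {"Term 1": 40.0, "Term 2": 60.0},
    "Subject B": {"Term 1": 45.0, "Term 2": 50.0},
}


def progress(shares, start="Term 1", end="Term 2"):
    """Percentage-point change in the share of students at the expected level."""
    return {subject: terms[end] - terms[start] for subject, terms in shares.items()}


gains = progress(at_expected_level)
best = max(gains, key=gains.get)  # subject with the largest gain
for subject, gain in gains.items():
    print(f"{subject}: {gain:+.1f} percentage points")
print("Largest gain:", best)
```

Note that in this made-up data Subject B starts higher in Term 1 but gains less, which is why the comparison is made on progress between terms and not on the level reached in any single term.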

Collecting data at this scale, across over 37,000 government schools with tests administered by approximately one lakh teachers, is a massive undertaking in itself and involves multiple challenges. The intersection of technology and assessment is an exciting field in which new inroads are being made, with researchers experimenting with new and innovative methods of test administration and assessment design. While assessments cannot be deemed a silver bullet that can solve all the challenges impeding foundational literacy and numeracy, they can nevertheless help guide instruction and remedial intervention at the school level and evidence-based policy making at the systems level.

References

Chan, C. K. Y., & Yeung, N. C. J. (2021). To assess or not to assess holistic competencies – Student perspectives in Hong Kong. Studies in Educational Evaluation, 68. https://doi.org/10.1016/j.stueduc.2021.100984

Joynes, C., Rossignoli, S., & Fenyiwa Amonoo-Kuofi, E. (2019). 21st Century Skills: Evidence of issues in definition, demand and delivery for development contexts (K4D Helpdesk Report). Brighton, UK: Institute of Development Studies

Neumann, M. M., Anthony, J. L., Erazo, N. A., & Neumann, D. L. (2019). Assessment and Technology: Mapping Future Directions in the Early Childhood Classroom. Frontiers in Education, 4. https://doi.org/10.3389/feduc.2019.00116

Schildkamp, K., van der Kleij, F., Heitink, M. C., Kippers, W., & Veldkamp, B. (2020). Formative assessment: A systematic review of critical teacher prerequisites for classroom practice. International Journal of Educational Research, 103, 101602. https://doi.org/10.1016/j.ijer.2020.101602

Schildkamp, K., & Kuiper, W. (2010). Data-informed curriculum reform: Which data, what purposes, and promoting and hindering factors. Teaching and Teacher Education, 26, 482–496. https://doi.org/10.1016/j.tate.2009.06.007

Stobart, G. (2008). Testing times: The uses and abuses of assessment. London: Routledge

Wesolowski, B. (2020). Validity, Reliability, and Fairness in Classroom Tests. https://doi.org/10.4324/9780429202308-5